You might have noticed that execution plan changed in CBO , specially

in following case

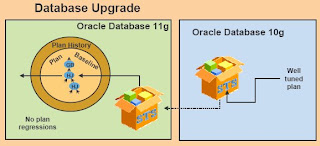

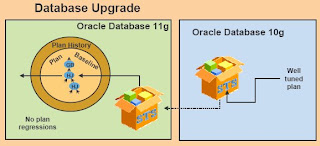

- Database Upgrade

- Database / Schema Stats collection

- Change in environment ( LinkUnix to Linux Migration )

- Change in data

Case: You want to upgrade database from 10g to 11g and this change can cause regressions in SQL performance,and fixing them manually can be difficult and time consuming. Sql tuning can be used but, this is a reactive mechanism and cannot guarantee stable performance when drastic changes happen to the system. SQL tuning can only resolve performance issues after they have occurred and are identified. For example, a SQL statement may become a high-load statement due to a plan change, but this cannot be resolved by SQL tuning until after the plan change occurs.

Solution: Oracle 11g new features SQL plan management (SPM) records and evaluates the execution plans of SQL Statements over time, and builds SQL plan baselines composed of a set of existing plans known to be efficient. The SQL plan baselines are then used to preserve performance of corresponding SQL statements, regardless of changes occurring in the system.

Note:- Before upgrading database to 11g (11.1.0.6) , i have collect SQL Tuning set (STS) for 7 Day using "capture_cursor_cache_sqlset". By assuming that in 7 day all SQL stas will collected in STS. After that upgraded database to 11g ( from 10g 10.2.0.3) , i have used this STS to compare execution plan from 11g. Please check

"SQL Performance Analyzer Part - 2"for steps to transfer STS one database to other database

1) Capturing SQL Plan Baselines

· Automatic Plan Capture ( 11g Only )

· Manual Plan Capture ( 10g and 11g )

2) Make Changes Upgrade Database / collect stats / Migrate Database to Linux

3) Upload SQL Plan Baseline

4) Enable the use of SQL plan baselines

5) Evolving SQL Plan Baselines

1) Capturing SQL Plan Baselines in oracle 10g before upgrading to 11g

a) Automatic Plan Capture(11g only)

When OPTIMIZER_CAPTURE_SQL_PLAN_BASELINES set true , then database automatically creates and maintains the plan history for SQL statements using information provided by the optimizer.

>

In init.ora file OPTIMIZER_CAPTURE_SQL_PLAN_BASELINES = true OR SQL>Alter system set OPTIMIZER_CAPTURE_SQL_PLAN_BASELINES = true; |

Note : - Above automatic plan capture only work on 11g , if you want to capture plan in 10g , then check section “Manual Plan Capture”

b) Manual Plan Capture ( 10g and 11g )

Following script will capture sql plan in SQL tuning set for 7 Day ( this script will run for 7 day , you can change time according to your need )

exec dbms_sqltune.drop_sqlset('STS_RAG_CMUL');

exec dbms_sqltune.create_sqlset('STS_RAG_CMUL'); DECLARE

sts_cmul VARCHAR2(30) := 'STS_RAG_CMUL';

BEGIN dbms_sqltune.capture_cursor_cache_sqlset(sts_cmul,

604800,

1,

'MERGE',

dbms_sqltune.MODE_ACCUMULATE_STATS

); END;

|

capture_cursor_cache_sqlset : The procedure captures a workload from the cursor cache into a SQL tuning set, polling the cache multiple times over a time period and updating the workload data stored there. It can execute over as long a period as required to capture an entire system workload.

- time_limit : 604800 ( The total amount of time, in seconds, to execute , 7 day = 7 * 24 * 60 * 60 = 604800 )

- repeat_interval : 1 ( The amount of time, in seconds, to pause between sampling )

- capture_option During capture, either insert new statements, update existing ones, or both.

- 'INSERT'

- 'UPDATE',

- 'MERGE'

- capture_mode capture Option (UPDATE and MERGE capture options).

- MODE_REPLACE_OLD_STATS - Replace statistics when the number of executions

seen is greater than that stored in the SQL tuning set

MODE_ACCUMULATE_STATS - Add new values to current values for SQL we already store. Note that this mode detects if a statement has been aged out, so the final value for a statistics will be the sum of the statistics of all cursors that statement existed under.

The CAPTURE_CURSOR_CACHE_SQLSET function enables the capture of the full system workload by repeatedly polling the cursor cache over a specified interval. This function is a lot more efficient than repeatedly using the SELECT_CURSOR_CACHE and LOAD_SQLSET procedures to capture the cursor cache over an extended period of time. This function effectively captures the entire workload, as SQL Profiles opposed to the AWR—which only captures the workload of high-load SQL statements or the LOAD_SQLSET procedure, which accesses the data source only once.

2) Make Changes e.g. Upgrade Database / collect stats / Migrate Database to Linux Collect stats

exec dbms_stats.set_param('ESTIMATE_PERCENT','100'); exec dbms_stats.set_param('method_opt','FOR ALL COLUMNS SIZE 254'); exec dbms_stats.Gather_Database_Stats;

|

3) Upload SQL Plan Baseline

If you have collected stats manually in SQL tuning sets ( STS) , then you need to

upload baseline from STS

-- Upload plan manually using dbms_spm

-- variable pls number; exec :pls := dbms_spm.load_plans_from_sqlset(sqlset_name=>'STS_RAG_CMUL',-

basic_filter=>'parsing_schema_name like ''APPS'' and plan_hash_value!=0', -

fixed=>'NO',commit_rows=>1000);

|

4) Enable the use of SQL plan baselines

To enable the use of SQL plan baselines, set the OPTIMIZER_USE_SQL_PLAN_BASELINES

initialization parameter to TRUE. By default, this parameter is set to TRUE.

--In init.ora file

-- OPTIMIZER_USE_SQL_PLAN_BASELINES = TRUE OR

SQL> alter system set OPTIMIZER_USE_SQL_PLAN_BASELINES= true; |

5) Evolving SQL Plan Baselines

I have captured 10g plans in STS and after upgrading database to 11g uploaded those plans ,using dbms_spm.load_plans_from_sqlset. After uploading plans manually , I have set

OPTIMIZER_CAPTURE_SQL_PLAN_BASELINES to true.

--Check not accepted Plans

-- SQL> select sql_handle, plan_name, enabled, accepted, fixed

from dba_sql_plan_baselines

Where ACCEPTED='NO' and

PARSING_SCHEMA_NAME like 'APPS'; SQL_HANDLE PLAN_NAME ENABLED ACCEPTED FIXED

--------------------- ------------------------- ------- --------- -------

SYS_SQL_9295397103ae5ebe SYS_SQL_PLAN_74720f16b0fcefa7 YES NO NO

SYS_SQL_9295397103ad3eba SYS_SQL_PLAN_03ad3ebaa086802f YES NO NO

SYS_SQL_434ef30d9da6a29b SYS_SQL_PLAN_9da6a29b1f6e321d YES NO NO

SYS_SQL_13836d6b3da62bbb SYS_SQL_PLAN_3da62bbbe5990995 YES NO NO

.......................................... ....................................

40 rows selected.

|

-- Displaying SQL Plan Baselines

-- SQL> select * from table(dbms_xplan.display_sql_plan_baseline(sql_handle=>'SYS_SQL_13836d6b3da62bbb',format=>'basic')); --------------------------------------------------------------------------------

SQL handle: SYS_SQL_13836d6b3da62bbb

SQL text: ( I have removed SQL text and changed table name in below execution plan)

--------------------------------------------------------------------------------

--------------------------------------------------------------------------------

Plan name: SYS_SQL_PLAN_3da62bbb91266099

Enabled: YES Fixed: NO Accepted: YES Origin: MANUAL-LOAD

-------------------------------------------------------------------------------- Plan hash value: 2877140902

-------------------------------------------------

Id Operation Name

-------------------------------------------------

0 SELECT STATEMENT

1 SORT ORDER BY

2 HASH JOIN SEMI

3 TABLE ACCESS FULL PROD

4 INDEX FULL SCAN COMP_PROD

------------------------------------------------- --------------------------------------------------------------------------------

Plan name: SYS_SQL_PLAN_3da62bbbe5990995

Enabled: YES Fixed: NO Accepted: NO Origin: AUTO-CAPTURE

-------------------------------------------------------------------------------- Plan hash value: 3245492848 -----------------------------------------

Id Operation Name

-----------------------------------------

0 SELECT STATEMENT

1 SORT ORDER BY

2 HASH JOIN SEMI

3 TABLE ACCESS FULL PROD

4 TABLE ACCESS FULL COMP

----------------------------------------- 42 rows selected. SQL> |

Evolving Plans with pls/sql function DBMS_SPM.EVOLVE_SQL_PLAN_BASELIN

--Evolving Plans With DBMS_SPM.EVOLVE_SQL_PLAN_BASELINE

-- SQL> DECLARE

report clob;

BEGIN report := DBMS_SPM.EVOLVE_SQL_PLAN_BASELINE(

sql_handle => 'SYS_SQL_13836d6b3da62bbb');

DBMS_OUTPUT.PUT_LINE(report);

END;

/ -------------------------------------------------------------------------------

Evolve SQL Plan Baseline Report

-------------------------------------------------------------------------------

Inputs:

-------

SQL_HANDLE =SYS_SQL_13836d6b3da62bbb

PLAN_NAME =

TIME_LIMIT = DBMS_SPM.AUTO_LIMIT

VERIFY = YES

COMMIT = YES Plan: SYS_SQL_PLAN_3da62bbbe5990995

-----------------------------------

Plan was verified: Time used .06 seconds.

Failed performance criterion: Compound improvement ratio <= .3. Baseline Plan Test Plan Improv. Ratio ------------- --------- ------------- Execution Status: COMPLETE COMPLETE

Rows Processed: 1460 1460

Elapsed Time(ms): 10 10 1

CPU Time(ms): 9 9 1

Buffer Gets: 84 286 .29

Disk Reads: 0 0

Direct Writes: 0 0

Fetches: 0 0

Executions: 1 1

-------------------------------------------------------------------------------

Report Summary

------------------------------------------------------------------------------- Number of SQL plan baselines verified: 1.

Number of SQL plan baselines evolved: 0.

PL/SQL procedure successfully completed.

SQL> |

Just for demo purpose , lets accept plan 2 ('SYS_SQL_PLAN_3da62bbbe5990995')

-- Acccept plan 2 ('SYS_SQL_PLAN_3da62bbbe5990995') and Fixed it

--

variable cnt number;

exec :cnt := dbms_spm.alter_sql_plan_baseline(sql_handle =>

'SYS_SQL_13836d6b3da62bbb', -

plan_name => 'SYS_SQL_PLAN_3da62bbbe5990995', -

attribute_name => 'ACCEPTED', attribute_value => 'YES'); SQL> exec :cnt := dbms_spm.alter_sql_plan_baseline(sql_handle =>

'SYS_SQL_13836d6b3da62bbb', -

plan_name => 'SYS_SQL_PLAN_3da62bbbe5990995', -

attribute_name => 'ACCEPTED', attribute_value => 'YES'); PL/SQL procedure successfully completed.

SQL> -- Same pl/sql function used to fix plans

-- Optimizer always picked FIXED plans

SQL> exec :cnt := dbms_spm.alter_sql_plan_baseline(sql_handle =>

'SYS_SQL_13836d6b3da62bbb', -

plan_name => 'SYS_SQL_PLAN_3da62bbbe5990995', -

attribute_name => 'FIXED', attribute_value => 'YES'); PL/SQL procedure successfully completed. SQL> - - Check status of plans

-- SQL> select sql_handle, plan_name, enabled, accepted, fixed from dba_sql_plan_baselines

2 Where FIXED='YES' and PARSING_SCHEMA_NAME like ''; SQL_HANDLE PLAN_NAME ENABLED ACCEPTED FIXED

----------------------------- ---------------------------- -------------- --------

SYS_SQL_13836d6b3da62bbb SYS_SQL_PLAN_3da62bbbe5990995 YES YES YES

SQL>

> |

>

-- Displaying SQL Plan Baselines after changes

-- SQL> select * from table(dbms_xplan.display_sql_plan_baseline(sql_handle=>'SYS_SQL_13836d6b3da62bbb',format=>'basic')); --------------------------------------------------------------------------------

SQL handle: SYS_SQL_13836d6b3da62bbb

SQL text: ( I have removed SQL text and changed table name in below execution plan)

--------------------------------------------------------------------------------

--------------------------------------------------------------------------------

Plan name: SYS_SQL_PLAN_3da62bbb91266099

Enabled: YES Fixed: NO Accepted: YES Origin: MANUAL-LOAD

-------------------------------------------------------------------------------- Plan hash value: 2877140902 -------------------------------------------------

Id Operation Name

-------------------------------------------------

0 SELECT STATEMENT

1 SORT ORDER BY

2 HASH JOIN SEMI

3 TABLE ACCESS FULL PROD

4 INDEX FULL SCAN COMP_PROD

-------------------------------------------------

--------------------------------------------------------------------------------

Plan name: SYS_SQL_PLAN_3da62bbbe5990995

Enabled: YES Fixed: YES Accepted: YES Origin: AUTO-CAPTURE

-------------------------------------------------------------------------------- Plan hash value: 3245492848 -----------------------------------------

Id Operation Name

-----------------------------------------

0 SELECT STATEMENT

1 SORT ORDER BY

2 HASH JOIN SEMI

3 TABLE ACCESS FULL PROD

4 TABLE ACCESS FULL COMP

-----------------------------------------

42 rows selected. |

Reference

SQL Plan Management Chapter 15